Northwest of Beijing’s Forbidden City, outside the Third Ring Road, the Chinese Academy of Sciences has spent seven decades building a campus of national laboratories. Near its center is the Institute of Automation, a sleek silvery-blue building surrounded by camera-studded poles. The institute is a basic research facility. Its computer scientists inquire into artificial intelligence’s fundamental mysteries. Their more practical innovations—iris recognition, cloud-based speech synthesis—are spun off to Chinese tech giants, AI start-ups, and, in some cases, the People’s Liberation Army.

I visited the institute on a rainy morning in the summer of 2019. China’s best and brightest were still shuffling in post-commute, dressed casually in basketball shorts or yoga pants, AirPods nestled in their ears. In my pocket, I had a burner phone; in my backpack, a computer wiped free of data—standard precautions for Western journalists in China. To visit China on sensitive business is to risk being barraged with cyberattacks and malware. In 2019, Belgian officials on a trade mission noticed that their mobile data were being intercepted by pop-up antennae outside their Beijing hotel.

After clearing the institute’s security, I was told to wait in a lobby monitored by cameras. On its walls were posters of China’s most consequential postwar leaders. Mao Zedong loomed large in his characteristic four-pocket suit. He looked serene, as though satisfied with having freed China from the Western yoke. Next to him was a fuzzy black-and-white shot of Deng Xiaoping visiting the institute in his later years, after his economic reforms had set China on a course to reclaim its traditional global role as a great power.

The lobby’s most prominent poster depicted Xi Jinping in a crisp black suit. China’s current president and the general secretary of its Communist Party has taken a keen interest in the institute. Its work is part of a grand AI strategy that Xi has laid out in a series of speeches akin to those John F. Kennedy used to train America’s techno-scientific sights on the moon. Xi has said that he wants China, by year’s end, to be competitive with the world’s AI leaders, a benchmark the country has arguably already reached. And he wants China to achieve AI supremacy by 2030.

Xi’s pronouncements on AI have a sinister edge. Artificial intelligence has applications in nearly every human domain, from the instant translation of spoken language to early viral-outbreak detection. But Xi also wants to use AI’s awesome analytical powers to push China to the cutting edge of surveillance. He wants to build an all-seeing digital system of social control, patrolled by precog algorithms that identify potential dissenters in real time.

China’s government has a history of using major historical events to introduce and embed surveillance measures. In the run-up to the 2008 Olympics in Beijing, Chinese security services achieved a new level of control over the country’s internet. During China’s coronavirus outbreak, Xi’s government leaned hard on private companies in possession of sensitive personal data. Any emergency data-sharing arrangements made behind closed doors during the pandemic could become permanent.

China already has hundreds of millions of surveillance cameras in place. Xi’s government hopes to soon achieve full video coverage of key public areas. Much of the footage collected by China’s cameras is parsed by algorithms for security threats of one kind or another. In the near future, every person who enters a public space could be identified, instantly, by AI matching them to an ocean of personal data, including their every text communication, and their body’s one-of-a-kind protein-construction schema. In time, algorithms will be able to string together data points from a broad range of sources—travel records, friends and associates, reading habits, purchases—to predict political resistance before it happens. China’s government could soon achieve an unprecedented political stranglehold on more than 1 billion people.

Early in the coronavirus outbreak, China’s citizens were subjected to a form of risk scoring. An algorithm assigned people a color code—green, yellow, or red—that determined their ability to take transit or enter buildings in China’s megacities. In a sophisticated digital system of social control, codes like these could be used to score a person’s perceived political pliancy as well.

A crude version of such a system is already in operation in China’s northwestern territory of Xinjiang, where more than 1 million Muslim Uighurs have been imprisoned, the largest internment of an ethnic-religious minority since the fall of the Third Reich. Once Xi perfects this system in Xinjiang, no technological limitations will prevent him from extending AI surveillance across China. He could also export it beyond the country’s borders, entrenching the power of a whole generation of autocrats.

China has recently embarked on a number of ambitious infrastructure projects abroad—megacity construction, high-speed rail networks, not to mention the country’s much-vaunted Belt and Road Initiative. But these won’t reshape history like China’s digital infrastructure, which could shift the balance of power between the individual and the state worldwide.

American policy makers from across the political spectrum are concerned about this scenario. Michael Kratsios, the former Peter Thiel acolyte whom Donald Trump picked to be the U.S. government’s chief technology officer, told me that technological leadership from democratic nations has “never been more imperative” and that “if we want to make sure that Western values are baked into the technologies of the future, we need to make sure we’re leading in those technologies.”

Despite China’s considerable strides, industry analysts expect America to retain its current AI lead for another decade at least. But this is cold comfort: China is already developing powerful new surveillance tools, and exporting them to dozens of the world’s actual and would-be autocracies. Over the next few years, those technologies will be refined and integrated into all-encompassing surveillance systems that dictators can plug and play.

The emergence of an AI-powered authoritarian bloc led by China could warp the geopolitics of this century. It could prevent billions of people, across large swaths of the globe, from ever securing any measure of political freedom. And whatever the pretensions of American policy makers, only China’s citizens can stop it. I’d come to Beijing to look for some sign that they might.

This techno-political moment has been long in the making. China has spent all but a few centuries of its 5,000-year history at the vanguard of information technology. Along with Sumer and Mesoamerica, it was one of three places where writing was independently invented, allowing information to be stored outside the human brain. In the second century A.D., the Chinese invented paper. This cheap, bindable information-storage technology allowed data—Silk Road trade records, military communiqués, correspondence among elites—to crisscross the empire on horses bred for speed by steppe nomads beyond the Great Wall. Data began to circulate even faster a few centuries later, when Tang-dynasty artisans perfected woodblock printing, a mass-information technology that helped administer a huge and growing state.

As rulers of some of the world’s largest complex social organizations, ancient Chinese emperors well understood the relationship between information flows and power, and the value of surveillance. During the 11th century, a Song-dynasty emperor realized that China’s elegant walled cities had become too numerous to be monitored from Beijing, so he deputized locals to police them. A few decades before the digital era’s dawn, Chiang Kai-shek made use of this self-policing tradition, asking citizens to watch for dissidents in their midst, so that communist rebellions could be stamped out in their infancy. When Mao took over, he arranged cities into grids, making each square its own work unit, where local spies kept “sharp eyes” out for counterrevolutionary behavior, no matter how trivial. During the initial coronavirus outbreak, Chinese social-media apps promoted hotlines where people could report those suspected of hiding symptoms.

Xi has appropriated the phrase sharp eyes, with all its historical resonances, as his chosen name for the AI-powered surveillance cameras that will soon span China. With AI, Xi can build history’s most oppressive authoritarian apparatus, without the manpower Mao needed to keep information about dissent flowing to a single, centralized node. In China’s most prominent AI start-ups—SenseTime, CloudWalk, Megvii, Hikvision, iFlytek, Meiya Pico—Xi has found willing commercial partners. And in Xinjiang’s Muslim minority, he has found his test population.

The Chinese Communist Party has long been suspicious of religion, and not just as a result of Marxist influence. Only a century and a half ago—yesterday, in the memory of a 5,000-year-old civilization—Hong Xiuquan, a quasi-Christian mystic converted by Western missionaries, launched the Taiping Rebellion, an apocalyptic 14-year campaign that may have killed more people than the First World War. Today, in China’s single-party political system, religion is an alternative source of ultimate authority, which means it must be co-opted or destroyed.

By 2009, China’s Uighurs had become weary after decades of discrimination and land confiscation. They launched mass protests and a smattering of suicide attacks against Chinese police. In 2014, Xi cracked down, directing Xinjiang’s provincial government to destroy mosques and reduce Uighur neighborhoods to rubble. More than 1 million Uighurs were disappeared into concentration camps. Many were tortured and made to perform slave labor.

Uighurs who were spared the camps now make up the most intensely surveilled population on Earth. Not all of the surveillance is digital. The Chinese government has moved thousands of Han Chinese “big brothers and sisters” into homes in Xinjiang’s ancient Silk Road cities, to monitor Uighurs’ forced assimilation to mainstream Chinese culture. They eat meals with the family, and some “big brothers” sleep in the same bed as the wives of detained Uighur men.

Meanwhile, AI-powered sensors lurk everywhere, including in Uighurs’ purses and pants pockets. According to the anthropologist Darren Byler, some Uighurs buried their mobile phones containing Islamic materials, or even froze their data cards into dumplings for safekeeping, when Xi’s campaign of cultural erasure reached full tilt. But police have since forced them to install nanny apps on their new phones. The apps use algorithms to hunt for “ideological viruses” day and night. They can scan chat logs for Quran verses, and look for Arabic script in memes and other image files.

Uighurs can’t use the usual work-arounds. Installing a VPN would likely invite an investigation, so they can’t download WhatsApp or any other prohibited encrypted-chat software. Purchasing prayer rugs online, storing digital copies of Muslim books, and downloading sermons from a favorite imam are all risky activities. If a Uighur were to use WeChat’s payment system to make a donation to a mosque, authorities might take note.

The nanny apps work in tandem with the police, who spot-check phones at checkpoints, scrolling through recent calls and texts. Even an innocent digital association—being in a group text with a recent mosque attendee, for instance—could result in detention. Staying off social media altogether is no solution, because digital inactivity itself can raise suspicions. The police are required to note when Uighurs deviate from any of their normal behavior patterns. Their database wants to know if Uighurs start leaving their home through the back door instead of the front. It wants to know if they spend less time talking to neighbors than they used to. An algorithm monitors electricity consumption for anomalies, which could indicate an unregistered resident.

Uighurs can travel only a few blocks before encountering a checkpoint outfitted with one of Xinjiang’s hundreds of thousands of surveillance cameras. Footage from the cameras is processed by algorithms that match faces with snapshots taken by police at “health checks.” At these checks, police extract all the data they can from Uighurs’ bodies. They measure height and take a blood sample. They record voices and swab DNA. Some Uighurs have even been forced to participate in experiments that mine genetic data, to see how DNA produces distinctly Uighurlike chins and ears. Police will likely use the pandemic as a pretext to take still more data from Uighur bodies.

Uighur women are also made to endure pregnancy checks. Some are forced to have abortions, or get an IUD inserted. Others are sterilized by the state. Police are known to rip unauthorized children away from their parents, who are then detained. Such measures have reduced the birthrate in some regions of Xinjiang more than 60 percent in three years.

When Uighurs reach the edge of their neighborhood, an automated system takes note. The same system tracks them as they move through smaller checkpoints, at banks, parks, and schools. When they pump gas, the system can determine whether they are the car’s owner. At the city’s perimeter, they’re forced to exit their cars, so their face and ID card can be scanned again.

The lucky Uighurs who are able to travel abroad—many have had their passports confiscated—are advised to return quickly. If they do not, police interrogators are dispatched to the doorsteps of their relatives and friends. Not that going abroad is any kind of escape: In a chilling glimpse at how a future authoritarian bloc might function, Xi’s strongman allies—even those in Muslim-majority countries such as Egypt—have been more than happy to arrest and deport Uighurs back to the open-air prison that is Xinjiang.

Xi seems to have used Xinjiang as a laboratory to fine-tune the sensory and analytical powers of his new digital panopticon before expanding its reach across the mainland. CETC, the state-owned company that built much of Xinjiang’s surveillance system, now boasts of pilot projects in Zhejiang, Guangdong, and Shenzhen. These are meant to lay “a robust foundation for a nationwide rollout,” according to the company, and they represent only one piece of China’s coalescing mega-network of human-monitoring technology.

China is an ideal setting for an experiment in total surveillance. Its population is extremely online. The country is home to more than 1 billion mobile phones, all chock-full of sophisticated sensors. Each one logs search-engine queries, websites visited, and mobile payments, which are ubiquitous. When I used a chip-based credit card to buy coffee in Beijing’s hip Sanlitun neighborhood, people glared as if I’d written a check.

All of these data points can be time-stamped and geo-tagged. And because a new regulation requires telecom firms to scan the face of anyone who signs up for cellphone services, phones’ data can now be attached to a specific person’s face. SenseTime, which helped build Xinjiang’s surveillance state, recently bragged that its software can identify people wearing masks. Another company, Hanwang, claims that its facial-recognition technology can recognize mask wearers 95 percent of the time. China’s personal-data harvest even reaps from citizens who lack phones. Out in the countryside, villagers line up to have their faces scanned, from multiple angles, by private firms in exchange for cookware.

Until recently, it was difficult to imagine how China could integrate all of these data into a single surveillance system, but no longer. In 2018, a cybersecurity activist hacked into a facial-recognition system that appeared to be connected to the government and was synthesizing a surprising combination of data streams. The system was capable of detecting Uighurs by their ethnic features, and it could tell whether people’s eyes or mouth were open, whether they were smiling, whether they had a beard, and whether they were wearing sunglasses. It logged the date, time, and serial numbers—all traceable to individual users—of Wi-Fi-enabled phones that passed within its reach. It was hosted by Alibaba and made reference to City Brain, an AI-powered software platform that China’s government has tasked the company with building.

City Brain is, as the name suggests, a kind of automated nerve center, capable of synthesizing data streams from a multitude of sensors distributed throughout an urban environment. Many of its proposed uses are benign technocratic functions. Its algorithms could, for instance, count people and cars, to help with red-light timing and subway-line planning. Data from sensor-laden trash cans could make waste pickup more timely and efficient.

But City Brain and its successor technologies will also enable new forms of integrated surveillance. Some of these will enjoy broad public support: City Brain could be trained to spot lost children, or luggage abandoned by tourists or terrorists. It could flag loiterers, or homeless people, or rioters. Anyone in any kind of danger could summon help by waving a hand in a distinctive way that would be instantly recognized by ever-vigilant computer vision. Earpiece-wearing police officers could be directed to the scene by an AI voice assistant.

City Brain would be especially useful in a pandemic. (One of Alibaba’s sister companies created the app that color-coded citizens’ disease risk, while silently sending their health and travel data to police.) As Beijing’s outbreak spread, some malls and restaurants in the city began scanning potential customers’ phones, pulling data from mobile carriers to see whether they’d recently traveled. Mobile carriers also sent municipal governments lists of people who had come to their city from Wuhan, where the coronavirus was first detected. And Chinese AI companies began making networked facial-recognition helmets for police, with built-in infrared fever detectors, capable of sending data to the government. City Brain could automate these processes, or integrate its data streams.

Even China’s most complex AI systems are still brittle. City Brain hasn’t yet fully integrated its range of surveillance capabilities, and its ancestor systems have suffered some embarrassing performance issues: In 2018, one of the government’s AI-powered cameras mistook a face on the side of a city bus for a jaywalker. But the software is getting better, and there’s no technical reason it can’t be implemented on a mass scale.

The data streams that could be fed into a City Brain–like system are essentially unlimited. In addition to footage from the 1.9 million facial-recognition cameras that the Chinese telecom firm China Tower is installing in cooperation with SenseTime, City Brain could absorb feeds from cameras fastened to lampposts and hanging above street corners. It could make use of the cameras that Chinese police hide in traffic cones, and those strapped to officers, both uniformed and plainclothes. The state could force retailers to provide data from in-store cameras, which can now detect the direction of your gaze across a shelf, and which could soon see around corners by reading shadows. Precious little public space would be unwatched.

America’s police departments have begun to avail themselves of footage from Amazon’s home-security cameras. In their more innocent applications, these cameras adorn doorbells, but many are also aimed at neighbors’ houses. China’s government could harvest footage from equivalent Chinese products. They could tap the cameras attached to ride-share cars, or the self-driving vehicles that may soon replace them: Automated vehicles will be covered in a whole host of sensors, including some that will take in information much richer than 2-D video. Data from a massive fleet of them could be stitched together, and supplemented by other City Brain streams, to produce a 3-D model of the city that’s updated second by second. Each refresh could log every human’s location within the model. Such a system would make unidentified faces a priority, perhaps by sending drone swarms to secure a positive ID.

The model’s data could be time-synced to audio from any networked device with a microphone, including smart speakers, smartwatches, and less obvious Internet of Things devices like smart mattresses, smart diapers, and smart sex toys. All of these sources could coalesce into a multitrack, location-specific audio mix that could be parsed by polyglot algorithms capable of interpreting words spoken in thousands of tongues. This mix would be useful to security services, especially in places without cameras: China’s iFlytek is perfecting a technology that can recognize individuals by their “voiceprint.”

In the decades to come, City Brain or its successor systems may even be able to read unspoken thoughts. Drones can already be controlled by helmets that sense and transmit neural signals, and researchers are now designing brain-computer interfaces that go well beyond autofill, to allow you to type just by thinking. An authoritarian state with enough processing power could force the makers of such software to feed every blip of a citizen’s neural activity into a government database. China has recently been pushing citizens to download and use a propaganda app. The government could use emotion-tracking software to monitor reactions to a political stimulus within an app. A silent, suppressed response to a meme or a clip from a Xi speech would be a meaningful data point to a precog algorithm.

All of these time-synced feeds of on-the-ground data could be supplemented by footage from drones, whose gigapixel cameras can record whole cityscapes in the kind of crystalline detail that allows for license-plate reading and gait recognition. “Spy bird” drones already swoop and circle above Chinese cities, disguised as doves. City Brain’s feeds could be synthesized with data from systems in other urban areas, to form a multidimensional, real-time account of nearly all human activity within China. Server farms across China will soon be able to hold multiple angles of high-definition footage of every moment of every Chinese person’s life.

It’s important to stress that systems of this scope are still in development. Most of China’s personal data are not yet integrated, even within individual companies. Nor does China’s government have a one-stop data repository, in part because of turf wars between agencies. But there are no hard political barriers to the integration of all these data, especially for the security state’s use. To the contrary, private firms are required, by formal statute, to assist China’s intelligence services.

The government might soon have a rich, auto-populating data profile for all of its 1 billion–plus citizens. Each profile would comprise millions of data points, including the person’s every appearance in surveilled space, as well as all of her communications and purchases. Her threat risk to the party’s power could constantly be updated in real time, with a more granular score than those used in China’s pilot “social credit” schemes, which already aim to give every citizen a public social-reputation score based on things like social-media connections and buying habits. Algorithms could monitor her digital data score, along with everyone else’s, continuously, without ever feeling the fatigue that hit Stasi officers working the late shift. False positives—deeming someone a threat for innocuous behavior—would be encouraged, in order to boost the system’s built-in chilling effects, so that she’d turn her sharp eyes on her own behavior, to avoid the slightest appearance of dissent.

If her risk factor fluctuated upward—whether due to some suspicious pattern in her movements, her social associations, her insufficient attention to a propaganda-consumption app, or some correlation known only to the AI—a purely automated system could limit her movement. It could prevent her from purchasing plane or train tickets. It could disallow passage through checkpoints. It could remotely commandeer “smart locks” in public or private spaces, to confine her until security forces arrived.

In recent years, a few members of the Chinese intelligentsia have sounded the warning about misused AI, most notably the computer scientist Yi Zeng and the philosopher Zhao Tingyang. In the spring of 2019, Yi published “The Beijing AI Principles,” a manifesto on AI’s potential to interfere with autonomy, dignity, privacy, and a host of other human values.

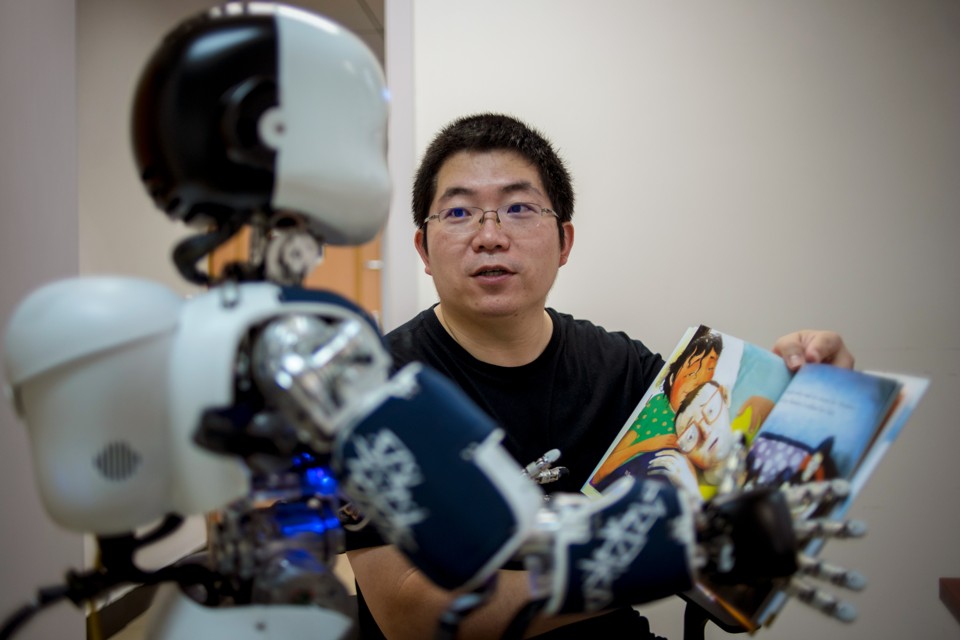

It was Yi whom I’d come to visit at Beijing’s Institute of Automation, where, in addition to his work on AI ethics, he serves as the deputy director of the Research Center for Brain-Inspired Intelligence. He retrieved me from the lobby. Yi looked young for his age, 37, with kind eyes and a solid frame slimmed down by black sweatpants and a hoodie.

On the way to Yi’s office, we passed one of his labs, where a research assistant hovered over a microscope, watching electrochemical signals flash neuron-to-neuron through mouse-brain tissue. We sat down at a long table in a conference room adjoining his office, taking in the gray, fogged-in cityscape while his assistant fetched tea.

I asked Yi how “The Beijing AI Principles” had been received. “People say, ‘This is just an official show from the Beijing government,’ ” he told me. “But this is my life’s work.”

Yi talked freely about AI’s potential misuses. He mentioned a project deployed to a select group of Chinese schools, where facial recognition was used to track not just student attendance but also whether individual students were paying attention.

“I hate that software,” Yi said. “I have to use that word: hate.”

He went on like this for a while, enumerating various unethical applications of AI. “I teach a course on the philosophy of AI,” he said. “I tell my students that I hope none of them will be involved in killer robots. They have only a short time on Earth. There are many other things they could be doing with their future.”

Yi clearly knew the academic literature on tech ethics cold. But when I asked him about the political efficacy of his work, his answers were less compelling.

“Many of us technicians have been invited to speak to the government, and even to Xi Jinping, about AI’s potential risks,” he said. “But the government is still in a learning phase, just like other governments worldwide.”

“Do you have anything stronger than that consultative process?” I asked. “Suppose there are times when the government has interests that are in conflict with your principles. What mechanism are you counting on to win out?”

“I, personally, am still in a learning phase on that problem,” Yi said.

Chinese AI start-ups aren’t nearly as bothered. Several are helping Xi develop AI for the express purpose of surveillance. The combination of China’s single-party rule and the ideological residue of central planning makes party elites powerful in every domain, especially the economy. But in the past, the connection between the government and the tech industry was discreet. Recently, the Chinese government started assigning representatives to tech firms, to augment the Communist Party cells that exist within large private companies.

Selling to the state security services is one of the fastest ways for China’s AI start-ups to turn a profit. A national telecom firm is the largest shareholder of iFlytek, China’s voice-recognition giant. Synergies abound: When police use iFlytek’s software to monitor calls, state-owned newspapers provide favorable coverage. Earlier this year, the personalized-news app Toutiao went so far as to rewrite its mission to articulate a new animating goal: aligning public opinion with the government’s wishes. Xu Li, the CEO of SenseTime, recently described the government as his company’s “largest data source.”

Whether any private data can be ensured protection in China isn’t clear, given the country’s political structure. The digital revolution has made data monopolies difficult to avoid. Even in America, which has a sophisticated tradition of antitrust enforcement, the citizenry has not yet summoned the will to force information about the many out of the hands of the powerful few. But private data monopolies are at least subject to the sovereign power of the countries where they operate. A nation-state’s data monopoly can be prevented only by its people, and only if they possess sufficient political power.

China’s people can’t use an election to rid themselves of Xi. And with no independent judiciary, the government can make an argument, however strained, that it ought to possess any information stream, so long as threats to “stability” could be detected among the data points. Or it can demand data from companies behind closed doors, as happened during the initial coronavirus outbreak. No independent press exists to leak news of these demands to.

Each time a person’s face is recognized, or her voice recorded, or her text messages intercepted, this information could be attached, instantly, to her government-ID number, police records, tax returns, property filings, and employment history. It could be cross-referenced with her medical records and DNA, of which the Chinese police boast they have the world’s largest collection.

Yi and I talked through a global scenario that has begun to worry AI ethicists and China-watchers alike. In this scenario, most AI researchers around the world come to recognize the technology’s risks to humanity, and develop strong norms around its use. All except for one country, which makes the right noises about AI ethics, but only as a cover. Meanwhile, this country builds turnkey national surveillance systems, and sells them to places where democracy is fragile or nonexistent. The world’s autocrats are usually felled by coups or mass protests, both of which require a baseline of political organization. But large-scale political organization could prove impossible in societies watched by pervasive automated surveillance.

Yi expressed worry about this scenario, but he did not name China specifically. He didn’t have to: The country is now the world’s leading seller of AI-powered surveillance equipment. In Malaysia, the government is working with Yitu, a Chinese AI start-up, to bring facial-recognition technology to Kuala Lumpur’s police as a complement to Alibaba’s City Brain platform. Chinese companies also bid to outfit every one of Singapore’s 110,000 lampposts with facial-recognition cameras.

In South Asia, the Chinese government has supplied surveillance equipment to Sri Lanka. On the old Silk Road, the Chinese company Dahua is lining the streets of Mongolia’s capital with AI-assisted surveillance cameras. Farther west, in Serbia, Huawei is helping set up a “safe-city system,” complete with facial-recognition cameras and joint patrols conducted by Serbian and Chinese police aimed at helping Chinese tourists to feel safe.

In the early aughts, the Chinese telecom titan ZTE sold Ethiopia a wireless network with built-in backdoor access for the government. In a later crackdown, dissidents were rounded up for brutal interrogations, during which they were played audio from recent phone calls they’d made. Today, Kenya, Uganda, and Mauritius are outfitting major cities with Chinese-made surveillance networks.

In Egypt, Chinese developers are looking to finance the construction of a new capital. It’s slated to run on a “smart city” platform similar to City Brain, although a vendor has not yet been named. In southern Africa, Zambia has agreed to buy more than $1 billion in telecom equipment from China, including internet-monitoring technology. China’s Hikvision, the world’s largest manufacturer of AI-enabled surveillance cameras, has an office in Johannesburg.

China uses “predatory lending to sell telecommunications equipment at a significant discount to developing countries, which then puts China in a position to control those networks and their data,” Michael Kratsios, America’s CTO, told me. When countries need to refinance the terms of their loans, China can make network access part of the deal, in the same way that its military secures base rights at foreign ports it finances. “If you give [China] unfettered access to data networks around the world, that could be a serious problem,” Kratsios said.

In 2018, CloudWalk Technology, a Guangzhou-based start-up spun out of the Chinese Academy of Sciences, inked a deal with the Zimbabwean government to set up a surveillance network. Its terms require Harare to send images of its inhabitants—a rich data set, given that Zimbabwe has absorbed migration flows from all across sub-Saharan Africa—back to CloudWalk’s Chinese offices, allowing the company to fine-tune its software’s ability to recognize dark-skinned faces, which have previously proved tricky for its algorithms.

Having set up beachheads in Asia, Europe, and Africa, China’s AI companies are now pushing into Latin America, a region the Chinese government describes as a “core economic interest.” China financed Ecuador’s $240 million purchase of a surveillance-camera system. Bolivia, too, has bought surveillance equipment with help from a loan from Beijing. Venezuela recently debuted a new national ID-card system that logs citizens’ political affiliations in a database built by ZTE. In a grim irony, for years Chinese companies hawked many of these surveillance products at a security expo in Xinjiang, the home province of the Uighurs.

If China is able to surpass America in AI, it will become a more potent geopolitical force, especially as the standard-bearer of a new authoritarian alliance.

China already has some of the world’s largest data sets to feed its AI systems, a crucial advantage for its researchers. In cavernous mega-offices in cities across the country, low-wage workers sit at long tables for long hours, transcribing audio files and outlining objects in images, to make the data generated by China’s massive population more useful. But for the country to best America’s AI ecosystem, its vast troves of data will have to be sifted through by algorithms that recognize patterns well beyond those grasped by human insight. And even executives at China’s search giant Baidu concede that the top echelon of AI talent resides in the West.

Historically, China struggled to retain elite quants, most of whom left to study in America’s peerless computer-science departments, before working at Silicon Valley’s more interesting, better-resourced companies. But that may be changing. The Trump administration has made it difficult for Chinese students to study in the United States, and those who are able to are viewed with suspicion. A leading machine-learning scientist at Google recently described visa restrictions as “one of the largest bottlenecks to our collective research productivity.”

Meanwhile, Chinese computer-science departments have gone all-in on AI. Three of the world’s top 10 AI universities, in terms of the volume of research they publish, are now located in China. And that’s before the country finishes building the 50 new AI research centers mandated by Xi’s “AI Innovation Action Plan for Institutions of Higher Education.” Chinese companies attracted 36 percent of global AI private-equity investment in 2017, up from just 3 percent in 2015. Talented Chinese engineers can stay home for school and work for a globally sexy homegrown company like TikTok after graduation.

China will still lag behind America in computing hardware in the near term. Just as data must be processed by algorithms to be useful, algorithms must be instantiated in physical strata—specifically, in the innards of microchips. These gossamer silicon structures are so intricate that a few missing atoms can reroute electrical pulses through the chips’ neuronlike switches. The most sophisticated chips are arguably the most complex objects yet built by humans. They’re certainly too complex to be quickly pried apart and reverse-engineered by China’s vaunted corporate-espionage artists.

Chinese firms can’t yet build the best of the best chip-fabrication rooms, which cost billions of dollars and rest on decades of compounding institutional knowledge. Nitrogen-cooled and seismically isolated, to prevent a passing truck’s rumble from ruining a microchip in vitro, these automated rooms are as much a marvel as their finished silicon wafers. And the best ones are still mostly in the United States, Western Europe, Japan, South Korea, and Taiwan.

America’s government is still able to limit the hardware that flows into China, a state of affairs that the Communist Party has come to resent. When the Trump administration banned the sale of microchips to ZTE in April 2018, Frank Long, an analyst who specializes in China’s AI sector, described it as a wake-up call for China on par with America’s experience of the Arab oil embargo.

But the AI revolution has dealt China a rare leapfrogging opportunity. Until recently, most chips were designed with flexible architecture that allows for many types of computing operations. But AI runs fastest on custom chips, like those Google uses for its cloud computing to instantly spot your daughter’s face in thousands of photos. (Apple performs many of these operations on the iPhone with a custom neural-engine chip.) Because everyone is making these custom chips for the first time, China isn’t as far behind: Baidu and Alibaba are building chips customized for deep learning. And in August 2019, Huawei unveiled a mobile machine-learning chip. Its design came from Cambricon, perhaps the global chip-making industry’s most valuable start-up, which was founded by Yi’s colleagues at the Chinese Academy of Sciences.

By 2030, AI supremacy might be within range for China. The country will likely have the world’s largest economy, and new money to spend on AI applications for its military. It may have the most sophisticated drone swarms. It may have autonomous weapons systems that can forecast an adversary’s actions after a brief exposure to a theater of war, and make battlefield decisions much faster than human cognition allows. Its missile-detection algorithms could void America’s first-strike nuclear advantage. AI could upturn the global balance of power.

On my way out of the Institute of Automation, Yi took me on a tour of his robotics lab. In the high-ceilinged room, grad students fiddled with a giant disembodied metallic arm and a small humanoid robot wrapped in a gray exoskeleton while Yi told me about his work modeling the brain. He said that understanding the brain’s structure was the surest way to understand the nature of intelligence.

I asked Yi how the future of AI would unfold. He said he could imagine software modeled on the brain acquiring a series of abilities, one by one. He said it could achieve some semblance of self-recognition, and then slowly become aware of the past and the future. It could develop motivations and values. The final stage of its assisted evolution would come when it understood other agents as worthy of empathy.

I asked him how long this process would take.

“I think such a machine could be built by 2030,” Yi said.

Before bidding Yi farewell, I asked him to imagine things unfolding another way. “Suppose you finish your digital, high-resolution model of the brain,” I said. “And suppose it attains some rudimentary form of consciousness. And suppose, over time, you’re able to improve it, until it outperforms humans in every cognitive task, with the exception of empathy. You keep it locked down in safe mode until you achieve that last step. But then one day, the government’s security services break down your office door. They know you have this AI on your computer. They want to use it as the software for a new hardware platform, an artificial humanoid soldier. They’ve already manufactured a billion of them, and they don’t give a damn if they’re wired with empathy. They demand your password. Do you give it to them?”

“I would destroy my computer and leave,” Yi said.

“Really?” I replied.

“Yes, really,” he said. “At that point, it would be time to quit my job and go focus on robots that create art.”

If you were looking for a philosopher-king to chart an ethical developmental trajectory for AI, you could do worse than Yi. But the development path of AI will be shaped by overlapping systems of local, national, and global politics, not by a wise and benevolent philosopher-king. That’s why China’s ascent to AI supremacy is such a menacing prospect: The country’s political structure encourages, rather than restrains, this technology’s worst uses.

Even in the U.S., a democracy with constitutionally enshrined human rights, Americans are struggling mightily to prevent the emergence of a public-private surveillance state. But at least America has political structures that stand some chance of resistance. In China, AI will be restrained only according to the party’s needs.

It was nearly noon when I finally left the institute. The day’s rain was in its last hour. Yi ordered me a car and walked me to meet it, holding an umbrella over my head. I made my way to the Forbidden City, Beijing’s historic seat of imperial power. Even this short trip to the city center brought me into contact with China’s surveillance state. Before entering Tiananmen Square, both my passport and my face were scanned, an experience I was becoming numb to.

In the square itself, police holding body-size bulletproof shields jogged in single-file lines, weaving paths through throngs of tourists. The heavy police presence was a chilling reminder of the student protesters who were murdered here in 1989. China’s AI-patrolled Great Firewall was built, in part, to make sure that massacre is never discussed on its internet. To dodge algorithmic censors, Chinese activists rely on memes—Tank Man approaching a rubber ducky—to commemorate the students’ murder.

The party’s AI-powered censorship extends well beyond Tiananmen. Earlier this year, the government arrested Chinese programmers who were trying to preserve disappeared news stories about the coronavirus pandemic. Some of the articles in their database were banned because they were critical of Xi and the party. They survived only because internet users reposted them on social media, interlaced with coded language and emojis designed to evade algorithms. Work-arounds of this sort are short-lived: Xi’s domestic critics used to make fun of him with images of Winnie the Pooh, but those too are now banned in China. The party’s ability to edit history and culture, by force, will become more sweeping and precise, as China’s AI improves.

Wresting power from a government that so thoroughly controls the information environment will be difficult. It may take a million acts of civil disobedience, like the laptop-destroying scenario imagined by Yi. China’s citizens will have to stand with their students. Who can say what hardships they may endure?

China’s citizens don’t yet seem to be radicalized against surveillance. The pandemic may even make people value privacy less, as one early poll in the U.S. suggests. So far, Xi is billing the government’s response as a triumphant “people’s war,” another old phrase from Mao, referring to the mobilization of the whole population to smash an invading force. The Chinese people may well be more pliant now than they were before the virus.

But evidence suggests that China’s young people, at least some of them, resented the government’s initial secrecy about the outbreak. For all we know, some new youth movement on the mainland is biding its time, waiting for the right moment to make a play for democracy. The people of Hong Kong certainly sense the danger of this techno-political moment. The night before I arrived in China, more than 1 million protesters had poured into the island’s streets. (The complimentary state-run newspaper in my Beijing hotel described them, falsely, as police supporters.) A great many held umbrellas over their heads, in solidarity with student protesters from years prior, and to keep their faces hidden. A few tore down a lamppost on the suspicion that it contained a facial-recognition camera. Xi has since tightened his grip on the region with a “national-security law,” and there is little that outnumbered Hong Kongers can do about it, at least not without help from a movement on the mainland.

During my visit to Tiananmen Square, I didn’t see any protesters. People mostly milled about peacefully, posing for selfies with the oversize portrait of Mao. They held umbrellas, but only to keep the August sun off their faces. Walking in their midst, I kept thinking about the contingency of history: The political systems that constrain a technology during its early development profoundly shape our shared global future. We have learned this from our adventures in carbon-burning. Much of the planet’s political trajectory may depend on just how dangerous China’s people imagine AI to be in the hands of centralized power. Until they secure their personal liberty, at some unimaginable cost, free people everywhere will have to hope against hope that the world’s most intelligent machines are made elsewhere.

This article appears in the September 2020 print edition with the headline “When China Sees All.”

from Technology | The Atlantic https://ift.tt/2CWsgkw
